Mock sample for your project: Tisane API Documentation

Integrate with "Tisane API Documentation" from tisane.ai in no time with Mockoon's ready-to-use mock sample.

Tisane API Documentation

tisane.ai

Version: 1.0.0

Speed up your application development by using the "Tisane API Documentation" ready-to-use mock sample. Mocking this API will allow you to start working in no time. No more accounts to create, API keys to provision, access to configure, or unplanned downtime: just work.

It also improves the quality and reliability of your integration tests by accounting for random failures, slow response times, etc.

Description

Tisane is a natural language processing library, providing:

standard NLP functionality

special functions for detection of problematic or abusive content

low-level NLP like morphological analysis and tokenization of no-space languages (Chinese, Japanese, Thai)

Tisane has a monolithic architecture. All the functions are exposed through the same language models and the same analysis process, invoked via the POST /parse method. Other methods in the API are either wrappers around this process, helper methods, or methods for inspecting the language models.

The current section of the documentation describes the two structures used in the parsing & transformation methods.

Getting Started

This guide describes how to set up your Tisane account. The steps you need to complete are as follows:

Step 1 – Create an Account

Step 2 – Save Your API Key

Step 3 – Integrate the API

Step 1 – Create an Account

Sign up for the Tisane API. The free Community Plan allows up to 50,000 requests but is limited to 10 requests per minute.

Step 2 – Save Your API Key

You will need the API key to make requests. Open your Developer Profile to find your API keys.

Step 3 – Integrate with the API

In summary, the request body of the POST /parse method has 3 attributes: content, language, and settings. All 3 attributes are mandatory.

For example:

{"language": "en", "content": "hello", "settings": {}}

Read on for more info on the response and the settings specs. The method doc pages contain snippets of code for your favorite languages and platforms.
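As an illustration of the request body above, here is a minimal Python sketch that builds (but does not send) a POST /parse request with the three mandatory attributes, using only the standard library. The endpoint URL (https://api.tisane.ai/parse) and the Ocp-Apim-Subscription-Key header name are assumptions not stated on this page; confirm both on the method doc pages and in your Developer Profile.

```python
import json
import urllib.request

# Assumptions: endpoint and auth header name are not given on this page.
TISANE_PARSE_URL = "https://api.tisane.ai/parse"
AUTH_HEADER = "Ocp-Apim-Subscription-Key"


def build_parse_request(api_key, content, language, settings=None):
    """Build a POST /parse request carrying content, language, and settings."""
    body = {
        "language": language,
        "content": content,
        # settings is mandatory; an empty object keeps every setting at its default
        "settings": settings if settings is not None else {},
    }
    return urllib.request.Request(
        TISANE_PARSE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", AUTH_HEADER: api_key},
        method="POST",
    )


if __name__ == "__main__":
    req = build_parse_request("YOUR_API_KEY", "hello", "en")
    # To actually send it: json.load(urllib.request.urlopen(req))
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

Building the request separately from sending it keeps the sketch testable offline and lets you point TISANE_PARSE_URL at a local Mockoon instance instead.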

Response Reference

The response of the POST /parse method contains several sections displayed or hidden according to the settings provided.

The common attributes are:

text (string) - the original input

reduced_output (boolean) - if the input is too big and verbose information (such as the lexical chunks) was requested, the verbose information is not generated; instead, this flag is set to true and returned as part of the response

sentiment (floating-point number) - a number in the range -1 to 1 indicating the document-level sentiment. Only shown when the document_sentiment setting is set to true.

signal2noise (floating-point number) - a signal to noise ranking of the text, in relation to the array of concepts specified in the relevant setting. Only shown when the relevant setting is provided.
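A minimal sketch of reading these common attributes from a decoded response. Because sentiment and signal2noise only appear under certain settings, it uses dict.get throughout; treating a missing reduced_output as false is an assumption based on the description above, and the sample body is hypothetical.

```python
import json


def summarize_common(response):
    """Extract the common top-level attributes of a decoded POST /parse response."""
    sentiment = response.get("sentiment")  # only present with document_sentiment: true
    if sentiment is not None and not -1.0 <= sentiment <= 1.0:
        raise ValueError(f"document sentiment out of range: {sentiment}")
    return {
        "text": response.get("text"),
        # Assumption: a missing reduced_output means nothing was dropped.
        "reduced_output": bool(response.get("reduced_output", False)),
        "sentiment": sentiment,
        # only present when the relevant setting was supplied
        "signal2noise": response.get("signal2noise"),
    }


raw = '{"text": "hello", "sentiment": 0.5}'  # hypothetical response body
print(summarize_common(json.loads(raw)))
```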

Abusive or Problematic Content

The abuse section is an array of detected instances of content that may violate some terms of use. NOTE: the terms of use in online communities may vary, and so it is up to the administrators to determine whether the content is indeed abusive. For instance, it makes no sense to restrict sexual advances in a dating community, or to censor profanity when it is accepted by most of the community.

The section exists if instances of abuse are detected and the abuse setting is either omitted or set to true.

Every instance contains the following attributes:

offset (unsigned integer) - zero-based offset where the instance starts

length (unsigned integer) - length of the content

sentence_index (unsigned integer) - zero-based index of the sentence containing the instance

text (string) - fragment of text containing the instance (only included if the snippets setting is set to true)

tags (array of strings) - when present, provides additional detail about the abuse. For instance, if the fragment is classified as an attempt to sell hard drugs, one of the tags will be hard_drug.

type (string) - the type of the abuse

severity (string) - how severe the abuse is. The levels of severity are low, medium, high, and extreme.

explanation (string) - when available, provides rationale for the annotation; set the explain setting to true to enable.

The currently supported types are:

personal_attack - an insult / attack on the addressee, e.g. an instance of cyberbullying. Please note that an attack on a post or a point, or just negative sentiment, is not the same as an insult. The line may be blurred at times. See our Knowledge Base for more information.

bigotry - hate speech aimed at one of the protected classes. The hate speech detected is not limited to racial slurs; generally, it covers hostile statements aimed at the group as a whole.

profanity - profane language, regardless of the intent

sexual_advances - welcome or unwelcome attempts to gain some sort of sexual favor or gratification

criminal_activity - attempts to sell or procure restricted items or criminal services, death threats, and so on

external_contact - attempts to establish contact or payment via external means of communication, e.g. phone, email, instant messaging (may violate the rules in certain communities, e.g. gig economy portals, e-commerce portals)

adult_only - activities restricted for minors (e.g. consumption of alcohol)

mental_issues - content indicative of suicidal thoughts or depression

spam - (RESERVED) spam content

generic - undefined
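Since the severity levels are ordered and each community decides which types it actually restricts, a moderation tool might filter the abuse section by a minimum severity and an allowed set of types. A minimal sketch follows; the helper name abuse_at_least and the sample instances are hypothetical.

```python
# Severity levels in increasing order, as listed above.
SEVERITY_ORDER = ["low", "medium", "high", "extreme"]


def abuse_at_least(response, min_severity, types=None):
    """Return abuse instances at or above min_severity, optionally limited to types."""
    threshold = SEVERITY_ORDER.index(min_severity)
    result = []
    # The abuse section is absent when nothing is detected, hence the default.
    for instance in response.get("abuse", []):
        if SEVERITY_ORDER.index(instance["severity"]) < threshold:
            continue
        if types is not None and instance["type"] not in types:
            continue
        result.append(instance)
    return result


# Hypothetical response: a dating community might ignore sexual_advances entirely.
sample = {
    "abuse": [
        {"sentence_index": 0, "type": "profanity", "severity": "low"},
        {"sentence_index": 1, "type": "personal_attack", "severity": "high"},
    ]
}
print(abuse_at_least(sample, "medium", types={"personal_attack", "bigotry"}))
```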

Sentiment Analysis

The sentiment_expressions section is an array of detected fragments indicating the attitude towards aspects or entities.

The section exists if sentiment is detected and the sentiment setting is either omitted or set to true.

Every instance contains the following attributes:

offset (unsigned integer) - zero-based offset where the instance starts

length (unsigned integer) - length of the content

sentence_index (unsigned integer) - zero-based index of the sentence containing the instance

text (string) - fragment of text containing the instance (only included if the snippets setting is set to true)

polarity (string) - whether the attitude is positive, negative, or mixed. Additionally, there is a default polarity, used when the entire snippet has been pre-classified. For instance, if a review is split into two portions, "What did you like?" and "What did you not like?", and the reviewer replies briefly, e.g. "The quiet." and "The service.", the utterances themselves carry no sentiment value. When the calling application is aware of the intended sentiment, the default sentiment simply provides the targets / aspects, and the application then attaches the sentiment to them externally.

targets (array of strings) - when available, provides the set of aspects and/or entities that are the targets of the sentiment. For instance, when the utterance is "The breakfast was yummy but the staff is unfriendly", the targets of the two sentiment expressions are meal and staff. Named entities may also be targets of the sentiment.

reasons (array of strings) - when available, provides the reasons for the sentiment. In the example utterance above ("The breakfast was yummy but the staff is unfriendly"), the reasons array for staff is ["unfriendly"], while the reasons array for meal is ["tasty"].

explanation (string) - when available, provides rationale for the sentiment; set the explain setting to true to enable.

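The following minimal Python sketch groups the sentiment_expressions section by target. The sample response is hypothetical, modeled on the breakfast/staff utterance above (offsets and lengths omitted); expressions without targets are grouped under None.

```python
from collections import defaultdict


def sentiment_by_target(response):
    """Map each target to the (polarity, reasons) pairs of the expressions naming it."""
    by_target = defaultdict(list)
    for expr in response.get("sentiment_expressions", []):
        reasons = expr.get("reasons", [])
        # targets may be missing or empty; keep such expressions under None
        for target in expr.get("targets") or [None]:
            by_target[target].append((expr["polarity"], reasons))
    return dict(by_target)


# Hypothetical response for "The breakfast was yummy but the staff is unfriendly".
sample = {
    "sentiment_expressions": [
        {"sentence_index": 0, "polarity": "positive", "targets": ["meal"], "reasons": ["tasty"]},
        {"sentence_index": 0, "polarity": "negative", "targets": ["staff"], "reasons": ["unfriendly"]},
    ]
}
print(sentiment_by_target(sample))
```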

Context-Aware Spelling Correction

Tisane supports automatic, context-aware spelling correction. When the language model does not recognize a word, Tisane attempts to deduce the intended meaning, whether the word is a misspelling or an apparent obfuscation.

If a correction is found, Tisane adds the corrected_text attribute to the word (if the words / lexical chunks are returned) and to the sentence (if the sentence text is generated). The sentence-level corrected_text is displayed if words or parses are set to true.

Note that since Tisane works with large dictionaries, you may need to exclude more esoteric terms using the min_generic_frequency setting.

Note that spellchecking is invoked regardless of whether the sentences and words sections are generated in the output. Spellchecking can be disabled by setting disable_spellcheck to true. Another option is to enable spellchecking for lowercase words only, thus excluding potential proper nouns in languages that support capitalization: to avoid spellchecking capitalized and uppercase words, set lowercase_spellcheck_only to true.
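Because corrected_text can appear at both the word and the sentence level, one way to collect corrections without assuming the names of the sentence and word containers is to walk the whole response. This sketch assumes that any object carrying corrected_text also carries the original text attribute; the container names in the sample (sentence_list, words) are hypothetical.

```python
# Settings discussed above: return words (needed for sentence-level corrected_text)
# and skip capitalized words, which are likely proper nouns, when spellchecking.
SPELLCHECK_SETTINGS = {"words": True, "lowercase_spellcheck_only": True}


def collect_corrections(node, found=None):
    """Recursively collect (text, corrected_text) pairs from any nested response."""
    if found is None:
        found = []
    if isinstance(node, dict):
        if "corrected_text" in node and "text" in node:
            found.append((node["text"], node["corrected_text"]))
        for value in node.values():
            collect_corrections(value, found)
    elif isinstance(node, list):
        for item in node:
            collect_corrections(item, found)
    return found


# Hypothetical response shape; only text and corrected_text come from this page.
sample = {
    "text": "I luv it",
    "sentence_list": [
        {
            "text": "I luv it",
            "corrected_text": "I love it",
            "words": [{"text": "luv", "corrected_text": "love"}, {"text": "it"}],
        }
    ],
}
print(collect_corrections(sample))
```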

Settings Reference

The purpose of the settings structure is to:

provide cues about the content being sent to improve the results

customize the output and select sections to be shown

define standards and formats in use

define and calculate the signal to noise ranking

All settings are optional. To keep all settings at their defaults, simply provide an empty object ({}).

Content Cues and Instructions

format (string) - the format of the content. Some policies are applied depending on the format, and certain logic in the underlying language models may require content of a particular format (e.g. logic applied to reviews may seek out sentiment more aggressively). The default format is empty / undefined. The format values are:

review - a review of a product or a service or any other review. Normally, the underlying language models seek out sentiment expressions more aggressively in reviews.

dialogue - a comment or a post which is part of a dialogue. An example of dialogue-specific logic is name calling: a single word like "idiot" would not be a personal attack in any other format, but it is certainly a personal attack when part of a dialogue.

shortpost - a microblogging post, e.g. a tweet.

longform - a long post or an article.

proofread - a post which was proofread. In proofread posts, spellchecking is switched off.

alias - a nickname in an online community.

search - a search query. Search queries may not always be grammatically correct. Certain topics and items that we would otherwise let pass are tagged when the search format is used.

disable_spellcheck (boolean) - determines whether the automatic spellchecking is to be disabled. Default: false.

lowercase_spellcheck_only (boolean) - determines whether the automatic spellchecking is only to be applied to words in lowercase. Default: false.

min_generic_frequency (int) - allows excluding more esoteric terms; the valid values are 0 through 10.

subscope (boolean) - enables sub-scope parsing, for scenarios like hashtag, URL parsing, and obfuscated content (e.g. ihateyou). Default: false.

domain_factors (set of pairs made of strings and numbers) - provides session-scope cues for the domains of discourse. This is a powerful tool that allows tailoring the result to the use case. The format is the family ID of the domain as the key and the multiplication factor as the value (e.g. "12345": 5.0). For example, when processing text looking for criminal activity, we may want to set the domains relevant to drugs, firearms, and crime higher: "domain_factors": {"31058": 5.0, "45220": 5.0, "14112": 5.0, "14509": 3.0, "28309": 5.0, "43220": 5.0, "34581": 5.0}. The same device can be used to eliminate noise from domains we know are irrelevant, by setting the factor to a value lower than 1.

when (date string, format YYYY-MM-DD) - indicates when the utterance was uttered. (TO BE IMPLEMENTED) The purpose is to prune word senses that were not available at a particular point in time. For example, the words troll, mail, and post had nothing to do with the Internet 300 years ago because there was no Internet, and so in a text that was written hundreds of years ago, we should ignore the word senses that emerged only recently.
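To tie the content cues together, here is a sketch of a settings object for the criminal-activity scenario above, reusing the domain_factors values from that example, plus a small client-side check against the documented values. The choice of format and subscope and the min_generic_frequency value of 5 are illustrative assumptions, and check_content_cues is a hypothetical helper, not part of the API.

```python
# Values allowed by the format setting, as listed above.
FORMATS = {"review", "dialogue", "shortpost", "longform", "proofread", "alias", "search"}


def check_content_cues(settings):
    """Raise ValueError if a content-cue setting is outside the documented values."""
    fmt = settings.get("format")
    if fmt is not None and fmt not in FORMATS:
        raise ValueError(f"unknown format: {fmt!r}")
    freq = settings.get("min_generic_frequency")
    if freq is not None and not 0 <= freq <= 10:
        raise ValueError("min_generic_frequency must be between 0 and 10")
    for family_id, factor in settings.get("domain_factors", {}).items():
        # Keys are family IDs (as strings); values are multiplication factors,
        # where > 1 boosts a domain and < 1 suppresses it.
        if not family_id.isdigit() or not isinstance(factor, (int, float)):
            raise ValueError(f"bad domain_factors entry: {family_id!r}: {factor!r}")
    return settings


# Hypothetical settings for screening chat messages for criminal activity.
settings = {
    "format": "dialogue",
    "subscope": True,  # also parse hashtags, URLs, and run-together text like "ihateyou"
    "min_generic_frequency": 5,  # illustrative value within 0..10
    "domain_factors": {
        "31058": 5.0, "45220": 5.0, "14112": 5.0, "14509": 3.0,
        "28309": 5.0, "43220": 5.0, "34581": 5.0,
    },
}
check_content_cues(settings)
```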

Output Customization

abuse (boolean) - output instances of abusive content (default: true)

sentiment (boolean) - output sentiment-bearing snippets (default: true)

document_sentiment (boolean) - output document-level sentiment (default: false)

entities (boolean) - output entities (default: true)

topics (boolean) - output topics (default: true), with two more relevant settings:

topic_stats (boolean) - include coverage statistics in the topic output (default: false). When set, each topic is an object containing the attributes topic (string) and coverage (floating-point number). The coverage indicates the share of sentences touching the topic among all the sentences.

optimize_topics (boolean) - if true, the less specific topics are removed if they are parts of the more specific topics. For example, when the topic is cryptocurrency, the optimization removes finance.

words (boolean) - output the lexical chunks / words for every sentence (default: false). In languages without white spaces (Chinese, Japanese, Thai), the tokens are tokenized words. In languages with compounds (e.g. German, Dutch, Norwegian), the compounds are split.

fetch_definitions (boolean) - include definitions of the words in the output (default: false). Only relevant when the words setting is true

parses (boolean) - output parse forests of phrases

deterministic (boolean) - determines whether only the detected sense is output. If true, only the detected sense is output; if false, the n-best senses and n-best parses are output in addition to the detected sense. Default: true

snippets (boolean) - include the text snippets in the abuse, sentiment, and entities sections (default: false)

explain (boolean) - if true, the reasoning behind the abuse and sentiment snippets is provided when possible (see the explanation attribute)
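With topic_stats enabled, each topic changes from a plain string to an object with topic and coverage, so client code may need to handle both shapes. A minimal sketch that normalizes them into (topic, coverage) pairs; the response key name topics is assumed to match the setting name, and normalize_topics is a hypothetical helper.

```python
def normalize_topics(response):
    """Return topics as (topic, coverage) pairs, whether or not topic_stats was set.

    Without topic_stats, each topic is a plain string and coverage is None.
    With topic_stats, each topic is an object with topic and coverage.
    """
    pairs = []
    for item in response.get("topics", []):
        if isinstance(item, str):
            pairs.append((item, None))
        else:
            pairs.append((item["topic"], item["coverage"]))
    return pairs


print(normalize_topics({"topics": [{"topic": "cryptocurrency", "coverage": 0.5}]}))
```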

Standards and Formats

feature_standard (string) - determines the standard used to output the features (grammar, style, semantics) in the response object. The standards we support are:

ud: Universal Dependencies tags (default)

penn: Penn treebank tags

native: Tisane native feature codes

description: Tisane native feature descriptions

Only the native Tisane standards (codes and descriptions) support style and semantic features.

topic_standard (string) - determines the standard used to output the topics in the response object. The standards we support are:

iptc_code - IPTC topic taxonomy code

iptc_description - IPTC topic taxonomy description

iab_code - IAB topic taxonomy code

iab_description - IAB topic taxonomy description

native - Tisane domain description, coming from the family description (default)

sentiment_analysis_type (string) - (RESERVED) the type of the sentiment analysis strategy. The values are:

products_and_services - the most common sentiment analysis of products and services

entity - sentiment analysis with entities as targets

creative_content_review - reviews of creative content

political_essay - political essays
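A small client-side helper can reject unsupported feature_standard and topic_standard values before a request is sent, including the rule that only the native standards carry style and semantic features. This is a sketch: standards_settings and its need_style_semantics flag are hypothetical, and the allowed values are copied from the lists above.

```python
FEATURE_STANDARDS = {"ud", "penn", "native", "description"}
TOPIC_STANDARDS = {"iptc_code", "iptc_description", "iab_code", "iab_description", "native"}
# Only the native Tisane standards (codes and descriptions) carry style and semantic features.
STYLE_SEMANTIC_STANDARDS = {"native", "description"}


def standards_settings(feature_standard="ud", topic_standard="native", need_style_semantics=False):
    """Build the standards part of the settings, rejecting values not listed above."""
    if feature_standard not in FEATURE_STANDARDS:
        raise ValueError(f"unsupported feature_standard: {feature_standard!r}")
    if topic_standard not in TOPIC_STANDARDS:
        raise ValueError(f"unsupported topic_standard: {topic_standard!r}")
    if need_style_semantics and feature_standard not in STYLE_SEMANTIC_STANDARDS:
        raise ValueError("style and semantic features require native or description")
    return {"feature_standard": feature_standard, "topic_standard": topic_standard}


print(standards_settings("description", "iab_code", need_style_semantics=True))
```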

Signal to Noise Ranking

When we are studying a collection of posts commenting on an issue or an article, we may want to prioritize the ones that are more relevant to the topic and contain more reason and logic than emotion. This is what the signal to noise ranking is meant to achieve.

The signal to noise ranking is made of two parts:

Determine the most relevant concepts. This part may be omitted, depending on the use case scenario (e.g. we want to track posts most relevant to a particular set of issues).

Rank the actual post in relevance to these concepts.

To determine the most relevant concepts, we need to analyze the headline or the article itself. The headline is usually enough. We need two additional settings:

keyword_features (an object with string keys and string values) - determines the features to look for in a word. When such a feature is found, the word's family ID is added to the set of potentially relevant family IDs.

stop_hypernyms (an array of integers) - if a potentially relevant family ID has a hypernym listed in this setting, it will not be considered. For example, we may extract a set of nouns from the headline but not be interested in abstractions or feelings: from a headline like "Fear and Loathing in Las Vegas", we want "Las Vegas" only. Optional.

If keyword_features is provided in the settings, the response will have a special attribute, relevant, containing a set of family IDs.

At the second stage, when ranking the actual posts or comments for relevance, this array is supplied among the settings. The ranking is boosted when the domains, the hypernyms, or the families related to those in the relevant array are mentioned, and when negative or positive sentiment is linked to aspects. It is penalized when negativity is not linked to aspects, or when abuse of any kind is found. The latter consideration may be disabled, e.g. when we are looking for specific criminal content: when the abuse_not_noise parameter is specified and set to true, abuse is not penalized by the ranking calculations.

To sum it up, in order to calculate the signal to noise ranking:

Analyze the headline with keyword_features and, optionally, stop_hypernyms in the settings. Obtain the relevant attribute.

When analyzing the posts or the comments, specify the relevant attribute obtained in step 1.
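The two-step procedure can be sketched as two request-body builders. Assumptions: the relevant array is passed back under a settings key named relevant, and the keyword_features mapping ({"1": "NOUN"}), the stop hypernym, and all family IDs in the usage lines are placeholders, not verified values. Sending each body works as for any POST /parse call; the ranking comes back as signal2noise.

```python
def headline_request(headline, keyword_features, stop_hypernyms=None, language="en"):
    """Step 1: request body asking Tisane for the relevant family IDs."""
    settings = {"keyword_features": keyword_features}
    if stop_hypernyms:
        settings["stop_hypernyms"] = list(stop_hypernyms)
    return {"language": language, "content": headline, "settings": settings}


def post_request(post, relevant, abuse_not_noise=False, language="en"):
    """Step 2: request body ranking a post against the step-1 relevant IDs."""
    settings = {"relevant": list(relevant)}  # assumed key name, see lead-in
    if abuse_not_noise:
        settings["abuse_not_noise"] = True  # do not penalize abuse in the ranking
    return {"language": language, "content": post, "settings": settings}


# Placeholder feature mapping and IDs, for illustration only.
step1 = headline_request("Fear and Loathing in Las Vegas", {"1": "NOUN"}, stop_hypernyms=[12345])
relevant = [11111]  # stands in for the "relevant" attribute of the step-1 response
step2 = post_request("Vegas hotels keep raising resort fees.", relevant)
print(step1, step2, sep="\n")
```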

Other APIs in the same category

VisibleThread API

The VisibleThread API provides services for analyzing/searching documents and web pages.

To use the service you need an API key.

Contact us at [email protected] to request an API key.

The services are split into Documents and Webscans.

Documents

Upload documents and dictionaries so you can:

Measure the readability of your document

Search a document for all terms from a dictionary

Retrieve all paragraphs from a document or only matching paragraphs

Webscans

Analyze web pages so you can:

Measure the readability of your web content

Identify & highlight content issues e.g. long sentences, passive voice

The VisibleThread API allows you to programmatically submit webpage URLs to be scanned, check on the results of a scan, and view a list of previous scans you have performed.

The VisibleThread API is an HTTP-based JSON API, accessible at https://api.visiblethread.com

Each request to the service requires your API key to be successful.

Getting Started With Webscans

Steps:

Enter your API key above and hit Explore.

Run a new scan by submitting a POST to /webscans (title and some webUrls are required).

The scan runs asynchronously in the background but returns immediately with a JSON response containing an "id" that represents your scan.

Check on the status of a scan by submitting GET /webscans/{scanId}. If the scan is still in progress, it returns HTTP 503; if it is complete, it returns HTTP 200 with JSON outlining the URLs scanned and the summary statistics for each URL.

Retrieve all your previous scan results by submitting GET /webscans.

Retrieve detailed results for a URL within a scan (readability, long sentence, and passive language instances) by submitting GET /webscans/{scanId}/webUrls/{urlId} (scanId and urlId are required).

Getting Started With Document Scans

Steps:

Enter your API key above and hit Explore.

Run a new scan by submitting a POST to /documents (document required). The scan runs asynchronously in the background but returns immediately with a JSON response containing an "id" that represents your scan.

Check on the status of a scan by submitting GET /documents/{scanId}. If the scan is still in progress, it returns HTTP 503; if it is complete, it returns HTTP 200 with JSON outlining the document readability results, including a detailed analysis of each paragraph in the document.

Retrieve all your previous scan results by submitting GET /documents.
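Both scan types follow the same asynchronous pattern: poll the status endpoint while it returns HTTP 503 and stop at HTTP 200. A minimal polling sketch, assuming a caller-supplied fetch function so that no particular HTTP client or API-key mechanism is implied (how the key is sent is not described here); wait_for_scan is a hypothetical helper name.

```python
import time

# Base URL from the VisibleThread description above.
BASE_URL = "https://api.visiblethread.com"


def wait_for_scan(fetch, kind, scan_id, interval=5.0, max_attempts=60, sleep=time.sleep):
    """Poll GET /{kind}/{scan_id} until the scan completes.

    fetch(url) is supplied by the caller and returns (status_code, body).
    kind is "webscans" or "documents".
    """
    url = f"{BASE_URL}/{kind}/{scan_id}"
    for _ in range(max_attempts):
        status, body = fetch(url)
        if status == 200:
            return body  # scan complete: JSON with the results
        if status != 503:
            raise RuntimeError(f"unexpected HTTP {status} for {url}")
        sleep(interval)  # 503 means the scan is still in progress
    raise TimeoutError(f"scan {scan_id} still in progress after {max_attempts} attempts")
```

Injecting fetch and sleep keeps the polling logic independent of the transport and lets it be exercised without network access.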

Searching a document for keywords

The VisibleThread API allows you to upload a set of keywords, or a 'dictionary'. You can then search an already uploaded document using that dictionary.

Steps (Assuming you have uploaded your document using the steps above):

Upload a CSV file to use as a keyword dictionary by submitting a POST to /dictionaries (CSV file required). This returns a JSON response with the dictionary ID.

Search a document with the dictionary by submitting a POST to /searches (document id and dictionary id required).

Get the results of the search by submitting GET /searches/{docId}/{dictionaryId}. This returns a JSON response containing detailed results of searching the document using the dictionary.

To view the list of all searches you have performed submit a GET /searches.

Below is a list of the available API endpoints, documentation & a form to try out each operation.

Fun Generators API

PDF Blocks API

Poemist API

Exude API Service

Random Lottery Number generator API

DynamicDocs

The template files are stored in your dashboard and can be edited, tested and published online. Document templates can contain dynamic text using logic statements, include tables stretching multiple pages and show great-looking charts based on the underlying data. LaTeX creates crisp, high-quality documents where every detail is well-positioned and styled.

Integrate with ADVICEment DynamicDocs API in minutes and start creating beautiful dynamic PDF documents for your needs.

For more information, visit DynamicDocs API Home page.

Football Prediction API

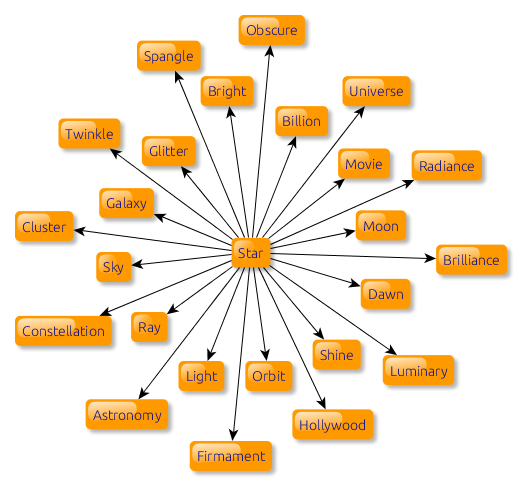

Word Associations API